Opus 4.6 vs Codex 5.3: The AI Coding Showdown

Why This Topic Matters Now: The AI Coding Wars Erupt

AI just had its “Super Bowl” moment. Within 20 minutes of each other, Anthropic and OpenAI both released major coding models: Claude Opus 4.6 and GPT-5.3 Codex.

This simultaneous release immediately confused developers, founders, and “vibe coders.” For a long time, the standard industry advice was simple: “just use the newest model.” But when the two most powerful coding models appear at the same time, that advice falls apart.

The problem now isn’t a lack of capability; it’s a paralysis of choice.

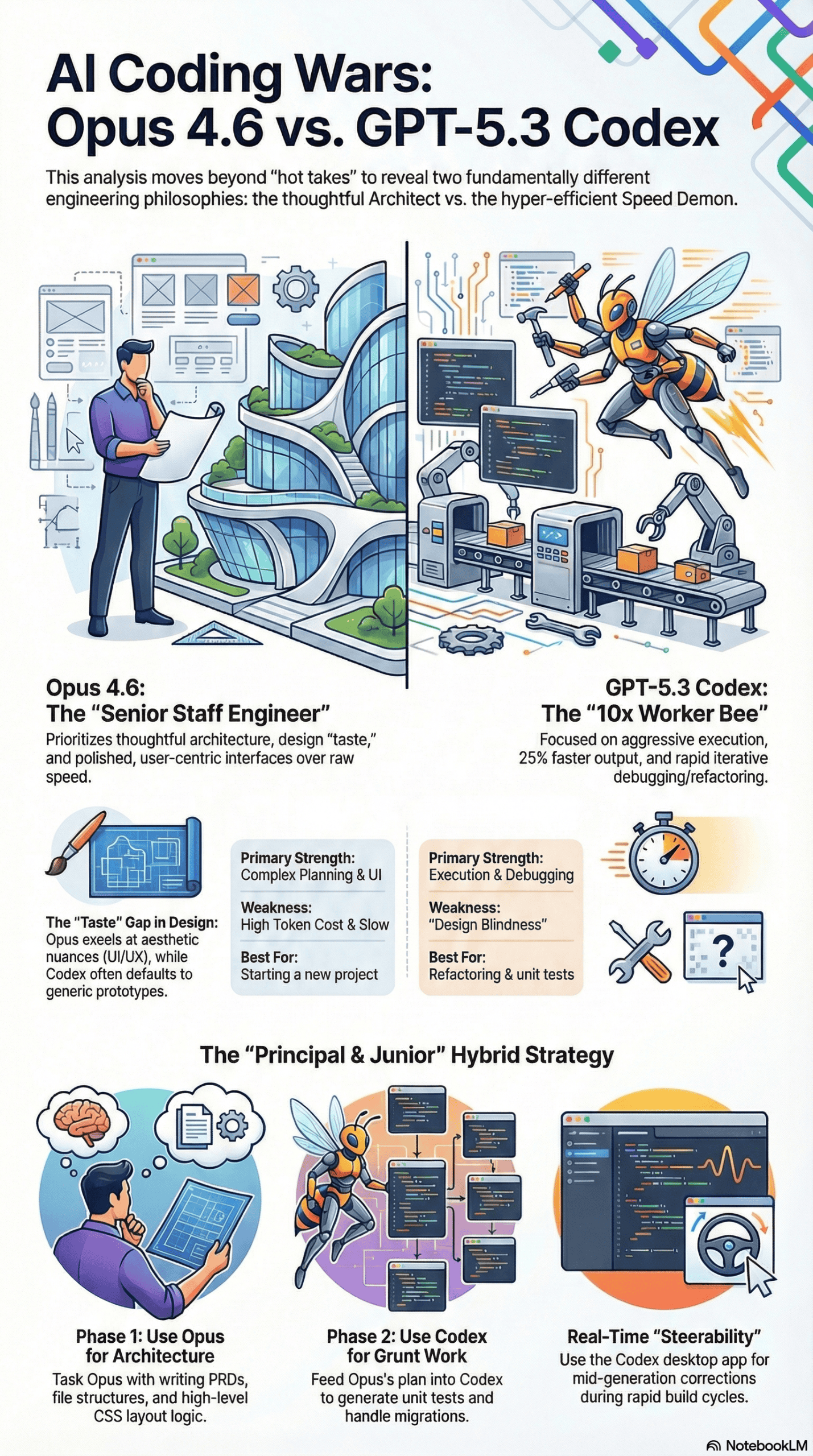

On Twitter and YouTube, advice quickly split into tribalism: “Team Claude” for aesthetics, “Team OpenAI” for raw speed. However, relying on these hot takes is misleading. After analyzing hours of live coding, stress tests, and real-world projects, I’m convinced these models aren’t just “chatbots” anymore. They represent two fundamentally different approaches to software engineering.

One acts like a thoughtful, expensive architect; the other is a hyper-efficient, slightly literal machine. Choosing the wrong one for your workflow doesn’t just waste $20; it wastes hours on structural cleanup.

How I Approached This Analysis

To provide a grounded perspective, I didn’t rely on a single “hello world” test. Instead, I analyzed a large body of real-world usage reports from the developer community immediately after the release.

My research methods included:

- Reviewing over 30 hours of live coding benchmarks across diverse tech stacks (Rust, C++, Python, Next.js, and hardware integrations), drawn largely from in-depth community coding sessions and comparisons.

- Analyzing distinct use cases, including building 3D games, integrating physical skateboard telemetry with Arduino, refactoring large Laravel codebases, and designing enterprise frontends. The Arduino skateboard project, for example, came from community project showcases and highlights specific model strengths.

- Studying the failure modes: when models hallucinated, broke the “fourth wall,” or simply gave up.

While I’m writing this in the first person to synthesize my analysis, various expert testers and developers across the community performed the specific coding experiments, hardware tests (like the Arduino skateboard project), and benchmark runs referenced here. My role was to gather these experiences into a cohesive guide to help you choose the right tool.

Common Expectations from New AI Models

When a new “frontier model” arrives, beginners and intermediate developers usually have a few optimistic expectations:

- The “silver bullet” assumption: Users expect the new model to simply “be better” at everything: faster, smarter, and cheaper all at once.

- Context omniscience: Opus 4.6 introduces a 1-million-token context window (in beta). People expect they can dump an entire messy codebase into the chat, and the AI will perfectly understand every dependency without error.

- One-shot perfection: Many believe we’re approaching the point where a single prompt like “Build me a Mario clone” will result in a production-ready, bug-free application.

The reality, however, is more nuanced. While we see “one-shot” successes in simple games, complex software engineering still needs a human pilot.

Real-World Performance: Opus vs. Codex in Practice

Across real workflows, a distinct pattern appears when developers switch between Claude Opus 4.6 and GPT-5.3 Codex.

The Opus Experience: The Thoughtful Architect

Developers starting a project with Claude Opus 4.6 immediately notice its “thoughtfulness.”

- Planning: Opus tends to pause, generating comprehensive to-do lists and implementation plans before writing any code.

- The Build: It writes more slowly and feels heavier. However, the output is often strikingly polished. In frontend design tests, such as recreating a “Jack Dorsey-inspired” minimalist interface, Opus consistently captured the vibe and aesthetic nuances that other models missed.

- The Trade-off: It’s expensive and “token-hungry.” I saw multiple instances where simple tasks consumed tens of thousands of tokens because Opus insists on thoroughness.

The Codex Experience: The Hyper-Efficient Speed Demon

Switching to GPT-5.3 Codex feels like taking off a weighted vest.

- Speed: It’s about 25% faster than its predecessors and outputs code aggressively.

- The Build: It’s a “grinder.” If you ask it to refactor a file or fix a bug, it does so instantly. In “self-healing” tests, where a model must fix its own compile errors, Codex often iterates faster: it tries solution A, fails, and immediately tries solution B.

- The Trade-off: It lacks taste. In head-to-head design battles, Codex apps often defaulted to a “brutalist” or generic look. It often nails the logic but leaves the user interface looking like a developer prototype.
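To make the “self-healing” loop concrete, here is a minimal Python sketch of the pattern: build, read the error, try the next candidate fix, and stop when the build is green. Everything in it is hypothetical: `self_heal`, the list of candidate fixes (standing in for model-proposed patches), and `toy_compiler` (standing in for a real compiler or test run). It is not how Codex or Opus actually works internally; it only illustrates why a model that abandons a failed fix quickly can converge faster.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical types: a "fix" maps source to patched source (a stand-in for a
# model-proposed patch); a "build" returns None on success or an error message.
Fix = Callable[[str], str]
Build = Callable[[str], Optional[str]]


def self_heal(source: str, candidate_fixes: List[Fix], build: Build,
              max_attempts: int = 5) -> Tuple[str, int]:
    """Apply candidate fixes one at a time until the build passes.

    Returns the (possibly patched) source and the number of fixes tried.
    """
    if build(source) is None:
        return source, 0                 # already green: nothing to heal
    attempts = 0
    for fix in candidate_fixes[:max_attempts]:
        attempts += 1
        candidate = fix(source)          # solution A, then solution B, ...
        if build(candidate) is None:
            return candidate, attempts   # success: stop iterating
        # Failure: discard this patch and try the next one on the original source.
    return source, attempts              # every candidate failed


def toy_compiler(src: str) -> Optional[str]:
    """Toy build step: the code 'compiles' only if parentheses balance."""
    return None if src.count("(") == src.count(")") else "unbalanced parentheses"


fixes: List[Fix] = [
    lambda s: s + "(",   # solution A: wrong, makes things worse
    lambda s: s + ")",   # solution B: closes the missing parenthesis
]
fixed, tries = self_heal("print('hi'", fixes, toy_compiler)
print(fixed, tries)
```

Discarding a failed patch instead of stacking the next one on top of it is a deliberate choice in this sketch; it keeps each attempt independent, which is closer to the “try A, fail, try B” behavior described above.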

Case Study: The Skateboard Controller Game

The most telling example I analyzed involved a physical skateboard controller game.

- Codex got the game running immediately, and it was fast. But when telemetry data grew complex, the logic drifted. The UI was functional but uninspired.

- Opus took longer and struggled with the initial Bluetooth library setup. However, once it locked in, it understood the physics of the skateboard lean better. It built a more robust logic system for detecting an “Ollie” and delivered a game that actually felt better to play, with superior animations.
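The sessions don’t say how either model’s Ollie logic actually worked. As a purely hypothetical illustration of what “more robust” can mean with lean telemetry, the Python sketch below treats an Ollie as an upward pitch spike followed by a return to level within a time window, so a sustained lean (like riding up a ramp) is not miscounted. The `OllieDetector` class, the pitch-in-degrees sample format, and every threshold are assumptions, not details from the project.

```python
from typing import List, Optional


class OllieDetector:
    """Hypothetical Ollie detector over board-pitch samples in degrees.

    Thresholds are illustrative assumptions, not values from any real project.
    """

    def __init__(self, pop_deg: float = 25.0, level_deg: float = 5.0,
                 max_air_samples: int = 30) -> None:
        self.pop_deg = pop_deg                  # nose must rise past this angle
        self.level_deg = level_deg              # within +/- this counts as level
        self.max_air_samples = max_air_samples  # landing must follow the pop this quickly

    def detect(self, pitch: List[float]) -> List[int]:
        """Return the sample index at which each detected Ollie landed."""
        landings: List[int] = []
        pop_index: Optional[int] = None  # set while the board is "in the air"
        prev = 0.0                       # assume the board starts level
        for i, p in enumerate(pitch):
            rising_pop = prev < self.pop_deg <= p  # upward crossing, not just "high"
            if pop_index is not None and i - pop_index > self.max_air_samples:
                pop_index = None  # tilted too long: a lean or ramp, not an Ollie
            if pop_index is None:
                if rising_pop:
                    pop_index = i
            elif abs(p) <= self.level_deg:
                landings.append(i)  # back to level in time: count one Ollie
                pop_index = None
            prev = p
        return landings


detector = OllieDetector()
print(detector.detect([0, 2, 30, 28, 10, 3, 1]))    # one clean Ollie
print(detector.detect([0, 30] + [30] * 40 + [0]))  # sustained lean: ignored
```

Requiring an upward crossing plus a timely landing is what separates this from a naive “pitch above threshold” check, which would fire repeatedly during any long lean.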

Identifying Failure Points: Where Each Model Falls Short

My analysis of these sessions revealed distinct failure patterns for each model. If you’re hitting a wall, it’s likely due to one of these recurring issues.

Opus 4.6 Common Pitfalls

- The “lazy” refusal (context rot): Despite its 1-million-token window, I noticed Opus can become forgetful as context fills up. It doesn’t necessarily hallucinate; it just ignores instructions it received 50 turns ago.

- Over-engineering: For simple scripts, Opus sometimes builds a cathedral when you asked for a shed. It sets up complex file structures and testing suites for a throwaway prototype, burning expensive tokens.

- Token cost shock: Users on standard $20/month plans often hit their usage caps much faster with Opus. It’s a “Rolls-Royce” model: expensive to run and best reserved for high-value tasks.

GPT-5.3 Codex Common Pitfalls

- Breaking the fourth wall: This was a bizarre but consistent bug I saw. When generating website copy, Codex would sometimes include its own internal logic notes (e.g., “Translated slugs for SEO”) directly into the user-facing text. It’s too literal.

- Design blindness: Codex struggles to infer visual hierarchy. If you ask for a “modern website,” it often gives you a generic Bootstrap-style layout unless you micro-manage the CSS.

- Rigid adherence to bad instructions: If you give Codex a slightly flawed prompt, it executes that flaw with blazing speed. It’s less likely than Opus to push back and say, “This architecture doesn’t make sense.”

Strategies for Success: The Hybrid Workflow

Failures teach us, but successes are where the money is. I analyzed workflows of developers who successfully shipped code using these new models, and a clear “hybrid pattern” emerged.

The “Principal Engineer & Junior Dev” Workflow

The most effective pattern uses both models in sequence.

Phase 1: Architecture & Design (Opus 4.6)

- Use Opus to write the Product Requirement Document (PRD).

- Use Opus to set up the initial file structure and tech stack.

- Why: Opus is stronger at deliberate, “System 2” planning. In tests where models had to create a specific “vibe” (like a retro 80s interface or a clean SaaS dashboard), Opus consistently nailed the CSS and layout on the first try.

Phase 2: Execution & Grunt Work (GPT-5.3 Codex)

- Once the plan is clear, feed the specific files to Codex for implementation.

- Use Codex for writing unit tests, refactoring massive files, and debugging specific error messages.

- Why: Codex is cheaper and faster. In a “Laravel AI SDK” test, Codex generated robust code with try/catch blocks and better error handling logic than Opus, simply because it ground through the engineering best practices.

Leveraging Steerability and IDE Integrations

I noticed the new Codex desktop app allows for “real-time steering.” You can interrupt the model mid-generation to correct a mistake, which is a game-changer for rapid iteration. Opus, which prefers to finish its thought, struggles with this.

Recommendation: Don’t rely solely on the web chat interface. Use an IDE integration like Cursor or Zed to get the most out of these models.

My Recommended Protocol for New Coding Projects

Based on everything I’ve analyzed from this dual release, here’s the exact protocol I’d advise for anyone starting a coding project today.

- Don’t pledge loyalty to one model: The days of being “Team OpenAI” or “Team Anthropic” are over. The models now differ by the kind of task they excel at, not by one simply being better overall.

- The “vibe check” protocol: If the task requires taste (writing marketing copy, designing a UI, or interpreting vague requirements), I’d exclusively use Opus 4.6. The sessions I reviewed suggest it has a higher “EQ” for user intent.

- The “refactor” protocol: If the task is well defined (“Migrate this database,” “Fix this TypeScript error,” “Write tests for this module”), I’d exclusively use GPT-5.3 Codex. It’s the superior “worker bee.”

- Use the right harness: The tool matters as much as the model.

  - I observed that Cursor (the AI code editor) works brilliantly with Opus for planning.

  - However, the Codex Desktop App showed great promise for git worktrees and for managing git history visually.
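The two protocols above amount to a routing rule. Here is a deliberately naive Python sketch of it, assuming keyword matching is good enough for illustration (a real setup would need a better classifier): clearly defined engineering tasks go to Codex, and anything involving taste, or anything ambiguous, goes to Opus. The `route` function, the `Model` enum, and both keyword sets are hypothetical.

```python
import re
from enum import Enum


class Model(Enum):
    OPUS = "Claude Opus 4.6"
    CODEX = "GPT-5.3 Codex"


# Hypothetical keyword sets; illustrative only, not a tested classifier.
DEFINED_TASK_WORDS = {"migrate", "fix", "error", "refactor", "test", "tests", "debug"}
TASTE_TASK_WORDS = {"design", "ui", "copy", "marketing", "layout", "landing", "vibe"}


def route(task: str) -> Model:
    """Pick a model for a task description using the hybrid protocol."""
    # Match whole words so that, e.g., "build" never matches "ui".
    words = set(re.findall(r"[a-z0-9]+", task.lower()))
    is_defined = bool(words & DEFINED_TASK_WORDS)
    needs_taste = bool(words & TASTE_TASK_WORDS)
    if is_defined and not needs_taste:
        return Model.CODEX  # well-defined grunt work: fast, literal execution
    return Model.OPUS       # taste, planning, or ambiguity: the "architect"


for task in ["Migrate this database", "Fix this TypeScript error", "Design a landing page UI"]:
    print(f"{task!r} -> {route(task).value}")
```

Sending mixed tasks (for example “Fix the UI layout”) to Opus mirrors the advice to use Opus whenever requirements are vague; you could just as reasonably flip that default.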

Final Takeaway: Orchestration is Key to Success

Here’s the unvarnished truth about the Opus 4.6 vs Codex 5.3 debate:

There’s no single winner.

If you force me to choose a “smartest” model, consensus leans toward Claude Opus 4.6. It feels like a Senior Staff Engineer who takes their time but delivers elegant, scalable architecture. It’s the model you want when you don’t know exactly what you’re doing and need guidance.

However, GPT-5.3 Codex is the model you want when you do know what you’re doing and you just want it built now. It’s the 10x developer who drinks too much coffee, types at 200 wpm, but occasionally creates an ugly user interface.

The Meta Strategy for AI Coding

The real winners of this release cycle aren’t the people who pick one model, but the people who learn to orchestrate both. Use Opus to dream and plan; use Codex to build and fix.

That’s how you ship faster than everyone else.