Claude Opus 4.6: An Analyst’s Honest Review of the “Smartest” Model

Why Claude Opus 4.6 Matters Now

The release of Claude Opus 4.6 marks a significant shift in how we interact with AI. We’re moving beyond simple “chatbots” toward autonomous “agent teams.” While headlines often highlight higher benchmarks and massive context windows, the practical reality is far more nuanced.

For developers and power users, the confusion is palpable. You’re asked to pay a premium price—significantly higher than competitors—for a model that some early testers describe as “slower” and “more robotic.” Yet, it also promises to resolve “context rot” in long-term coding projects and enables the creation of entire teams of agents working in parallel.

My analysis aims to cut through the marketing hype. If you’re deciding whether to invest your API credits in Opus 4.6 or stick with more affordable alternatives like Sonnet or GPT-4o, understanding the trade-offs between “raw intelligence” and “practical usability” is crucial.

How This Analysis Was Constructed

To provide a comprehensive review, I synthesized observations from hours of hands-on testing and deep-dive breakdowns by expert users. These individuals had either early access or immediate day-one experience with the model. My analysis draws from:

- Live Coding Sessions: Observing the model develop games, applications, and presentations in real-time.

- Benchmark Reviews: Examining performance data from Box AI, GPQA, and internal “Humanity’s Last Exam” scores.

- Security & Architecture Audits: Reviewing its capabilities in handling large codebases and identifying security vulnerabilities.

- Comparative Testing: Pitting Opus 4.6 against GPT 5.2/5.3 and previous Claude versions.

While I haven’t personally spent $20,000 on API credits to build a Linux compiler, I have meticulously studied the logs of those who did. This review represents a synthesis of lived technical experience from the bleeding edge of AI development.

What Users Expect from a “Smartest” AI Model

When a model is marketed as “the smartest AI coding model ever made,” expectations naturally skyrocket. Common user expectations include:

- The “Speed” Trap: Most users anticipate that a newer model will be inherently faster and more responsive.

- The “Magic Wand”: Beginners often expect that a 1 million token context window means they can upload an entire library of books and achieve perfect recall instantly.

- The “Vibe” Upgrade: Users of previous Opus models often expect the same “human-like,” witty personality, simply enhanced with greater intelligence.

The prevailing expectation is a linear upgrade: faster, cheaper, and unequivocally better.

The Reality of Claude Opus 4.6 in Practice

The actual experience of using Opus 4.6 often diverges from the initial hype.

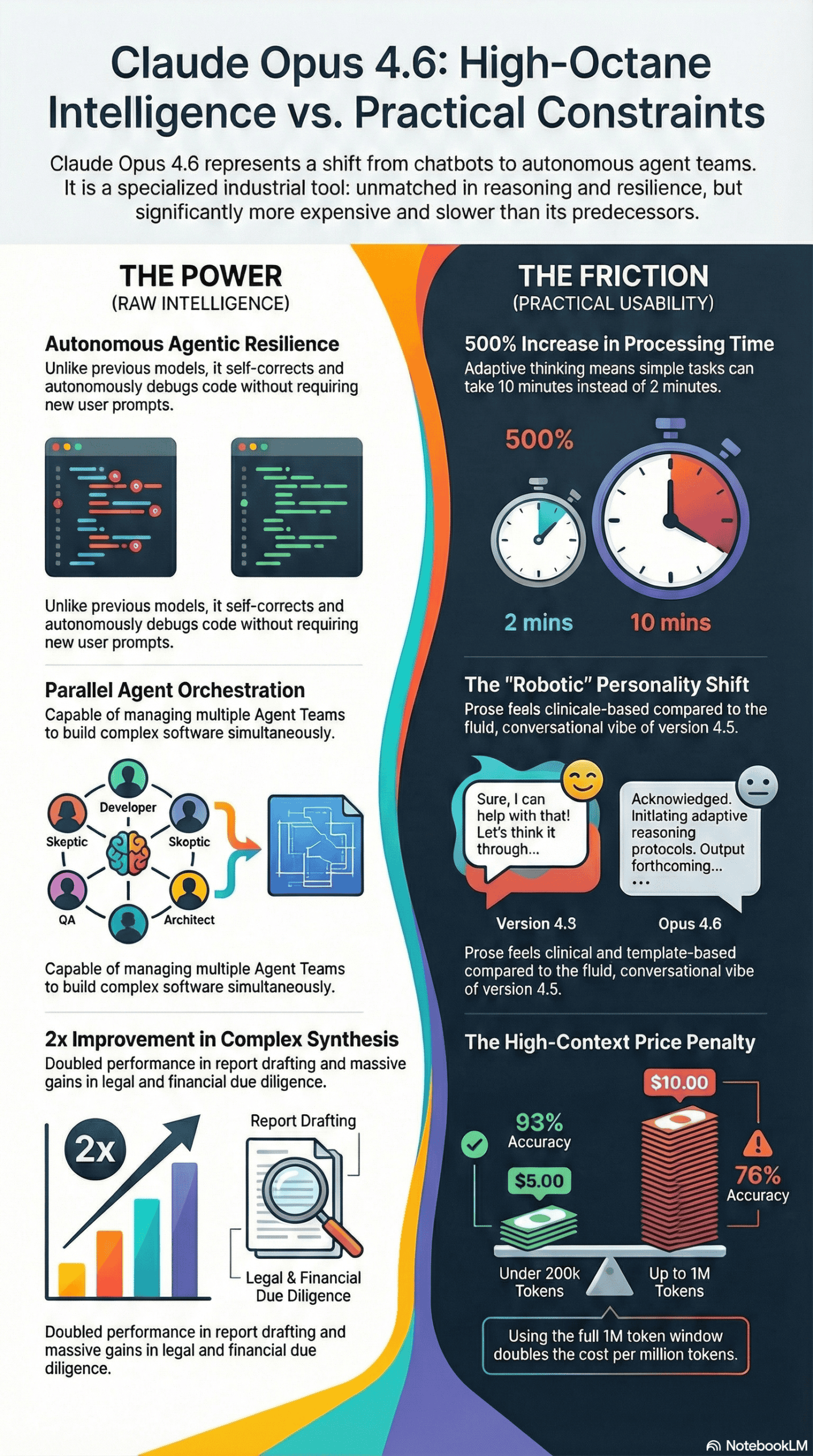

It Is Slower and More Methodical

If you’re accustomed to the snappy responses of lighter models, Opus 4.6 will feel considerably heavier. It frequently engages in deeper, more careful “thinking” before generating a response, often revisiting its reasoning to minimize mistakes. In practical tests, simple tasks that previously took 1-2 minutes can now extend to 5-10 minutes. This is because the model employs “adaptive thinking,” scaling the amount of reasoning it does to the complexity of the prompt.

The “Personality” Has Shifted

A consistent report I encountered is that the characteristic “magic” of the older Opus personality has diminished. The prose often feels more robotic and adheres to rigid templates rather than the fluid, conversational style users appreciated in 4.5. It feels less like a creative partner and more like a corporate tool designed for sterile efficiency.

It Demonstrates Unprecedented Resilience

The most striking behavioral change is Opus 4.6’s resilience. Previous model versions might hallucinate a fix or simply give up when encountering a roadblock. I observed Opus 4.6 hit a bug during a game development task (the game failed to start) and, instead of quitting, it autonomously debugged the player HP bars and corrected the code without requiring a new prompt. It can sustain “agentic” tasks for much longer horizons, capable of working autonomously for hours.

The Most Common Failure Points of Claude Opus 4.6

Based on the stress tests reviewed, these are the specific areas where Opus 4.6 often creates challenges for users.

1. The Cost Shock

This is arguably the most significant point of friction. Opus 4.6 maintains premium pricing: $5 per million input tokens and $25 per million output tokens. However, when you utilize the new 1 million token context window (for prompts exceeding 200k tokens), the price escalates to $10 input and $37.50 output. For more details on pricing, refer to Anthropic’s official pricing page.

- The Trap: Users frequently spin up “Agent Teams”—multiple instances of Claude—without realizing that each agent maintains its own context window. As one analyst noted, “All I’m hearing is GPU go burr.” Without careful orchestration, you can exhaust your budget incredibly fast.
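To make the arithmetic concrete, here is a minimal Python sketch using the rates quoted above. It assumes that a request whose prompt exceeds 200k tokens is billed entirely at the long-context rate, and that every agent in a team resends a similar-sized context on each turn. Both are simplifying assumptions, and the function names are illustrative rather than part of any SDK.

```python
# Rates in USD per million tokens, as quoted in this review.
STANDARD_RATES = (5.00, 25.00)        # (input, output)
LONG_CONTEXT_RATES = (10.00, 37.50)   # applies when the prompt exceeds 200k tokens
LONG_CONTEXT_THRESHOLD = 200_000


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request in USD.

    Assumption: once the prompt crosses the threshold, the whole
    request is billed at the long-context rates.
    """
    if input_tokens > LONG_CONTEXT_THRESHOLD:
        in_rate, out_rate = LONG_CONTEXT_RATES
    else:
        in_rate, out_rate = STANDARD_RATES
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


def team_cost(agents: int, turns: int, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of an agent team.

    Assumption: each agent keeps its own context window and sends
    roughly the same number of tokens on every turn.
    """
    return agents * turns * request_cost(input_tokens, output_tokens)


if __name__ == "__main__":
    print(f"Single 100k-token request: ${request_cost(100_000, 10_000):.2f}")
    print(f"Single 300k-token request: ${request_cost(300_000, 10_000):.2f}")
    print(f"3 agents x 20 turns:       ${team_cost(3, 20, 150_000, 5_000):.2f}")
```

Under these assumptions, three agents each sending a 150k-token prompt and receiving 5k tokens back over 20 turns come to about $52.50, and the price of a single request rises sharply once its prompt crosses the 200k line.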

2. Context Rot (Though Improved)

While Opus 4.6 boasts an impressive 1 million token context window, it is not entirely immune to degradation. In “Needle in a Haystack” retrieval tests, accuracy declined from 93% at 256k tokens to 76% at 1 million tokens. While this is significantly better than many competitors (who often score in the 18-20% range for reasoning over long contexts), it’s not perfect. Do not assume 100% recall at full capacity. Learn more about context window limitations on DeepLearning.AI.
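A rough way to see why this matters: if a task depends on several facts scattered through the context, the misses compound. The sketch below treats each lookup as independent and uses the two accuracy figures quoted above. The independence assumption is mine and is almost certainly too simple, so read the output as an illustration rather than a measurement.

```python
def chance_all_found(per_fact_accuracy: float, facts_needed: int) -> float:
    """Probability that every needed fact is retrieved.

    Assumption: each retrieval succeeds independently with the same
    probability, which real long-context behavior may not satisfy.
    """
    return per_fact_accuracy ** facts_needed


if __name__ == "__main__":
    for label, accuracy in (("256k tokens", 0.93), ("1M tokens", 0.76)):
        p = chance_all_found(accuracy, facts_needed=5)
        print(f"{label}: {p:.0%} chance of recalling all 5 facts")
```

With five required facts, this simple model gives roughly a 70% chance of complete recall at 256k tokens and roughly 25% at 1 million tokens.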

3. “Stupid” Smart Mistakes

Despite its advanced intelligence, I analyzed instances where the model flagged obvious placeholder variables (such as REPLACE_ME) as critical security vulnerabilities during audits. It can be overly eager to “fix” things that aren’t broken, leading to wasted time sifting through false positives. For general LLM challenges, Wikipedia’s page on Large Language Models provides a good overview.

What Consistently Works Well with Claude Opus 4.6

When Opus 4.6 is deployed for the appropriate tasks, its results are undeniably powerful.

1. Agent Teams and Orchestration

This is arguably the killer feature. I observed a setup where a user deployed a team of three specialized agents: a Backend Developer, a Frontend Developer, and—ingeniously—a “Skeptic” whose sole role was to review code and play devil’s advocate. This approach is similar to the concept of Agentic AI in prompt engineering.

- The Result: The agents worked in parallel (using tools like tmux), communicated effectively, and produced a fully functional global tide app from a single prompt. Crucially, the “Skeptic” agent identified blocking issues even before code was written.

2. Complex Retrieval and Synthesis

For intensive knowledge work, Opus 4.6 outperforms most other models. In benchmarks involving thousands of documents (e.g., legal or financial due diligence), Opus 4.6 demonstrated massive improvements. It jumped from 39% to 64% accuracy in Life Sciences and doubled scores in report drafting. It is exceptionally skilled at connecting disparate data points across scattered information.

3. Self-Correction in Coding

The “adaptive thinking” feature is more than just a buzzword. When developing a game, the model could recognize when assets were missing or code was broken and then autonomously generate its own fixes. This significantly reduces the amount of “hand-holding” required compared to previous models.

Recommendations for Using Claude Opus 4.6 Effectively

Based on the analysis of various workflows, here’s how I would approach Opus 4.6 to avoid the “cost shock” and maximize its value.

1. Don’t Use It for “Vibe Coding”

If your goal is to quickly build a simple website or write a basic script, Opus 4.6 is not the right tool. It’s too expensive and too slow for such tasks. Opt for Sonnet or a lighter model for your initial builds. Reserve Opus 4.6 for tackling “gnarly bugs” or performing deep architectural refactors that require it to comprehend an entire codebase.

2. Implement the “Skeptic” Agent

If you leverage the Agent Teams feature, always assign one agent the critical role of a reviewer or security auditor. The most successful runs I studied consistently featured agents not just writing code but also diligently *checking* it. This crucial step prevents the “hallucination cascade” where an error by one agent can derail an entire project.
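The control flow behind a reviewer role can be sketched in a few lines of Python. This is not the Agent Teams API: the builder and skeptic below are hypothetical stand-in functions (in practice each would be a model call), and the `Review` type and `run_with_skeptic` name are my own. The point is only the gate: nothing is accepted until the skeptic approves it.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Review:
    approved: bool
    issues: List[str] = field(default_factory=list)


def run_with_skeptic(
    task: str,
    builder: Callable[[str, List[str]], str],
    skeptic: Callable[[str, str], Review],
    max_rounds: int = 3,
) -> str:
    """Loop builder -> skeptic until the skeptic approves or rounds run out."""
    feedback: List[str] = []
    for _ in range(max_rounds):
        draft = builder(task, feedback)
        review = skeptic(task, draft)
        if review.approved:
            return draft
        feedback = review.issues  # feed objections into the next attempt
    raise RuntimeError(f"No approved draft after {max_rounds} rounds: {feedback}")


# Hypothetical stand-ins for model-backed agents, for demonstration only.
def stub_builder(task: str, feedback: List[str]) -> str:
    return "fetch_tides(timeout=10)" if feedback else "fetch_tides()"


def stub_skeptic(task: str, draft: str) -> Review:
    if "timeout" in draft:
        return Review(approved=True)
    return Review(approved=False, issues=["network call has no timeout"])


if __name__ == "__main__":
    print(run_with_skeptic("global tide app", stub_builder, stub_skeptic))
```

Capping the number of rounds matters as much as the review itself: an unbounded argument between two agents is another way to burn through a budget.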

3. Mind Your Context Window

Just because you can utilize 1 million tokens doesn’t mean you should. The significant pricing penalty is triggered when prompts exceed 200k tokens. I would aggressively exclude files the model doesn’t need (for example, by honoring ignore lists such as .gitignore) and employ context compaction techniques to keep prompts under that 200k threshold unless the extra context is absolutely essential.
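As a rough illustration of that budgeting step, the Python sketch below filters a set of files against ignore patterns and stops adding files before an estimated 200k tokens. The four-characters-per-token ratio is a crude heuristic I am assuming for illustration, not the model's real tokenizer, and the pattern matching uses simple globs rather than full .gitignore semantics.

```python
from fnmatch import fnmatch
from typing import Dict, List, Tuple

TOKEN_BUDGET = 200_000   # threshold quoted in this review
CHARS_PER_TOKEN = 4      # crude heuristic; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def select_files(
    files: Dict[str, str],
    ignore_patterns: List[str],
    budget: int = TOKEN_BUDGET,
) -> Tuple[List[str], int]:
    """Pick files to include in a prompt without exceeding the budget.

    Smaller files are considered first so one huge file cannot crowd
    out everything else. Returns (selected paths, estimated tokens).
    """
    selected: List[str] = []
    used = 0
    for path, text in sorted(files.items(), key=lambda item: len(item[1])):
        if any(fnmatch(path, pattern) for pattern in ignore_patterns):
            continue  # skip vendored or generated files
        cost = estimate_tokens(text)
        if used + cost > budget:
            continue  # would push the prompt over the budget
        selected.append(path)
        used += cost
    return selected, used


if __name__ == "__main__":
    repo = {
        "src/app.py": "x" * 400,
        "node_modules/lib.js": "y" * 4_000,
        "dist/bundle.js": "z" * 1_000_000,
    }
    print(select_files(repo, ["node_modules/*", "dist/*"]))
```

Running an estimate like this before each request makes crossing the 200k threshold a deliberate decision rather than an accident.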

Final Takeaway: The Honest Version

Claude Opus 4.6 is not a general-purpose upgrade that you should default to for every chat. Instead, it is a highly specialized heavy lifter.

It is expensive, methodically slow, and lacks the conversational charm of its predecessors. However, it is currently the only model capable of reliably orchestrating a team of autonomous agents to build complex software with minimal human intervention.

Use it when: You have a complex problem that requires reasoning across hundreds of files or need an autonomous agent to “think” for hours.

Avoid it when: You want a quick answer, a casual chat, or are operating on a tight API budget.

The era of “one model to rule them all” is over. Opus 4.6 is the specialized industrial machinery of the AI world—powerful, expensive, and best utilized by those who know precisely what they are building.